Configuring KeepAlive

TCPKeepAlive yes

I noticed this commented out in the server SSH config, does that kinda do the same thing as the the two keep alive commands you recommended?

ChatGPT 5.2 said:

It’s related, but it’s not the same thing, and it usually doesn’t solve the Starlink/NAT-idle “broken pipe” problem by itself.

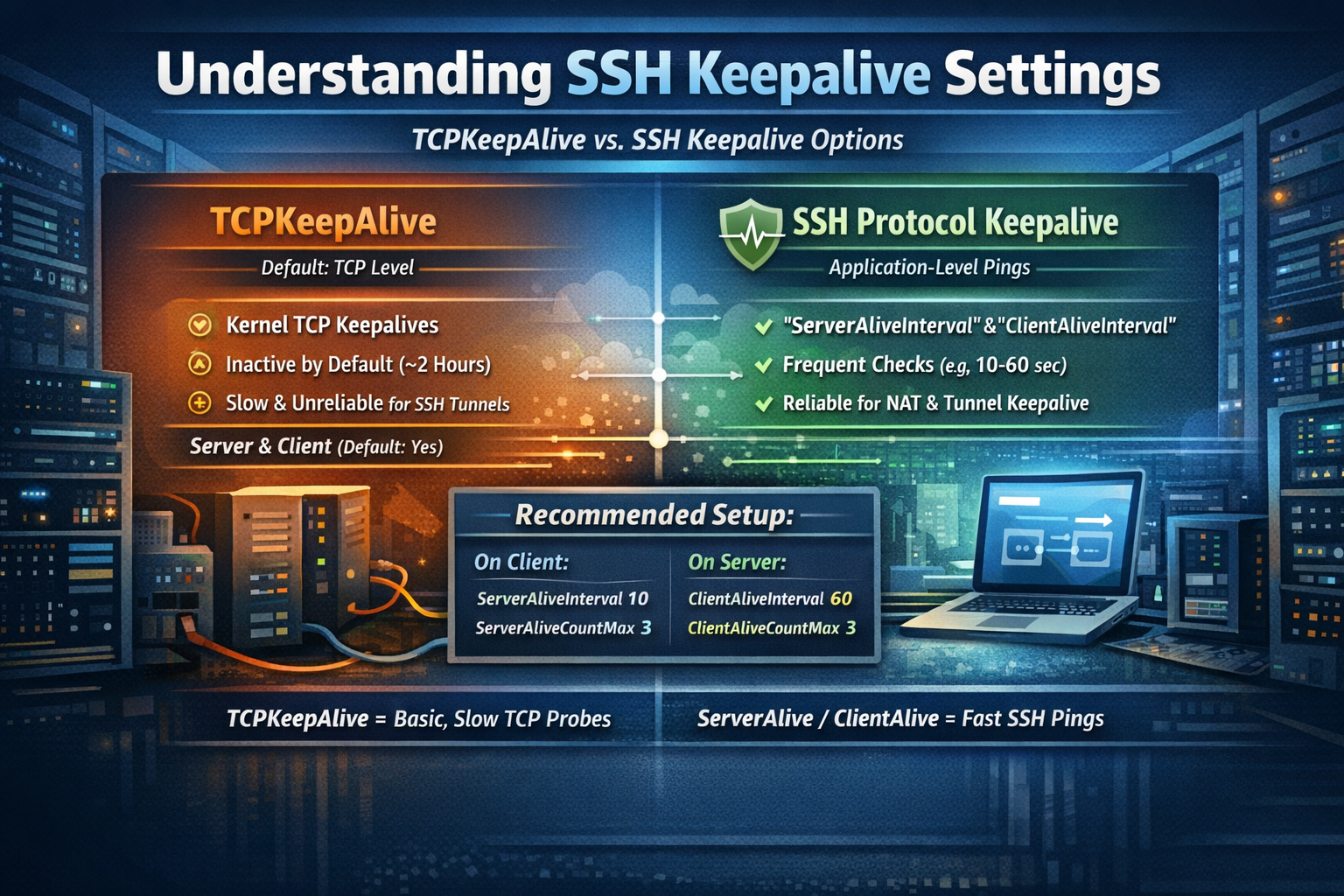

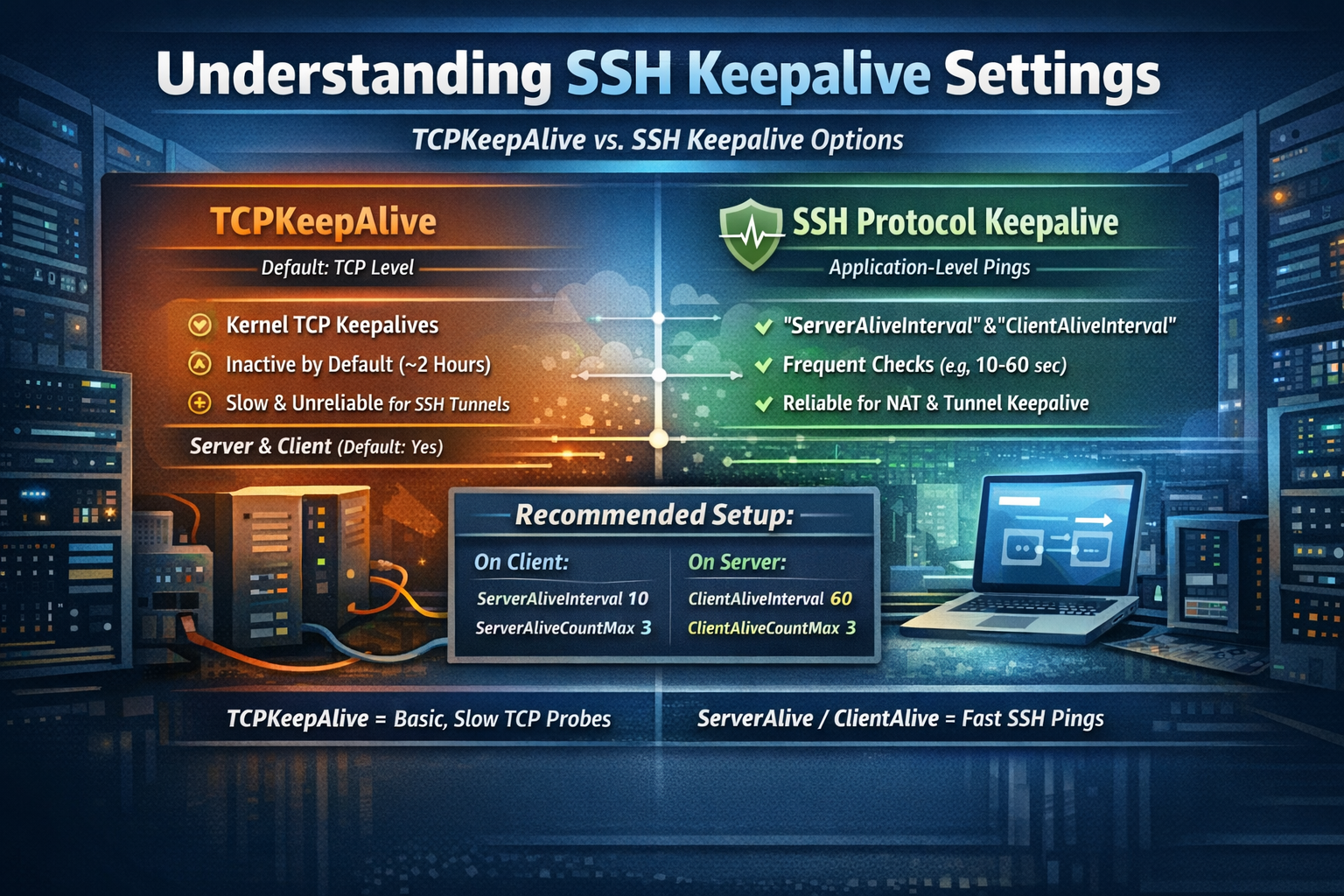

What TCPKeepAlive yes actually does

- This enables TCP keepalive probes at the kernel level (SO_KEEPALIVE).

- They’re meant to detect a dead peer (e.g., link died) eventually.

- The catch: on Linux the default timers are very long (often ~2 hours before the first probe, then spaced out). So it may not fire soon enough to keep an idle SSH session alive through NAT, CGNAT, Wi-Fi sleep, or Starlink path changes.

What the two keepalive settings I recommended do

There are two different “keepalive” families, and both are more useful for your case because they can be made frequent:

1) Server-side (in sshd_config)

ClientAliveInterval/ClientAliveCountMax- This makes sshd send an application-level keepalive to the client every N seconds.

- Great for keeping state alive through NAT and for detecting stuck sessions.

Example:

ClientAliveInterval 30

ClientAliveCountMax 3(That’s “ping every 30s; drop after ~90s of no response.”)

2) Client-side (in your ssh_config or command line)

ServerAliveInterval/ServerAliveCountMax- This makes the client send an SSH-level keepalive to the server.

Example:

ServerAliveInterval 30

ServerAliveCountMax 3So should you enable TCPKeepAlive?

Usually you can just leave it at the default (yes). It doesn’t hurt, but it’s not the tool that reliably prevents early idle disconnects on flaky/CGNAT-ish networks.

Best practical combo for your situation:

- On the server: set

ClientAliveInterval 30andClientAliveCountMax 3 - On the client: set

ServerAliveInterval 30andServerAliveCountMax 3 - Leave

TCPKeepAlive yesas-is.

If you tell me whether your “broken pipe” happens mostly when idle vs while actively typing/streaming output, I’ll point you to the next most likely cause (idle/NAT vs roaming/satellite path change vs wifi power saving).

You said:

Ok, I added the two lines in the SSH config adam@mcwai:~$ sudo systemctl restart ssh Job for ssh.service failed because the control process exited with error code. See "systemctl status ssh.service" and "journalctl -xeu ssh.service" for details.

Good catch and don’t log out of your current SSH session while we fix this. When ssh.service won’t restart right after editing config, it’s almost always a syntax error or an invalid directive in /etc/ssh/sshd_config.

1) Get the exact error (this usually tells you the line number)

Run:

sudo sshd -tIf it prints something like:

Bad configuration option: ServerAliveIntervalUnsupported option ...line XX: ...

…that’s the smoking gun.

Then also grab the service logs (more context):

sudo systemctl status ssh.service --no-pager -l

sudo journalctl -xeu ssh.service --no-pager | tail -n 802) Most common cause (and the fast fix)

If you accidentally put client-side options (like ServerAliveInterval) into sshd_config, sshd will refuse to start.

Server-side file: /etc/ssh/sshd_config should use:

ClientAliveInterval 30

ClientAliveCountMax 3Client-side file: ~/.ssh/config (or /etc/ssh/ssh_config) uses:

ServerAliveInterval 30

ServerAliveCountMax 33) Show me the lines you added (with line numbers)

This prints only keepalive-related lines + line numbers:

sudo grep -nE 'Alive|KeepAlive' /etc/ssh/sshd_configIf you want to see the exact surrounding area with line numbers:

sudo nl -ba /etc/ssh/sshd_config | sed -n '1,200p'4) Quick “get SSH back up” revert method

If you just want to restore service immediately, comment out the two lines you added, then test + restart:

sudo sshd -t && sudo systemctl restart ssh(After you comment them out.)

If you paste the output of sudo sshd -t (just that one line/error), I can tell you exactly what to change in one shot.

You said:

actually I sent the interval at 33, but I wouldn't think that would cause an issue

adam@mcwai:~$ sudo sshd -t

/etc/ssh/sshd_config: line 14: Bad configuration option: ServerAliveInterval

/etc/ssh/sshd_config: line 15: Bad configuration option: ServerAliveCountMax

/etc/ssh/sshd_config: terminating, 2 bad configuration options

adam@mcwai:~$ sudo systemctl status ssh.service --no-pager -l

sudo journalctl -xeu ssh.service --no-pager | tail -n 80

× ssh.service - OpenBSD Secure Shell server

Loaded: loaded (/usr/lib/systemd/system/ssh.service; disabled; preset: enabled)

Active: failed (Result: exit-code) since Mon 2026-02-16 01:09:09 EST; 1min 38s ago

Duration: 1w 10h 11min 9.130s

TriggeredBy: × ssh.socket

Docs: man:sshd(8)

man:sshd_config(5)

Process: 1327306 ExecStartPre=/usr/sbin/sshd -t (code=exited, status=255/EXCEPTION)

CPU: 4ms

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Scheduled restart job, restart counter is at 5.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Start request repeated too quickly.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Failed with result 'exit-code'.

Feb 16 01:09:09 mcwai systemd[1]: Failed to start ssh.service - OpenBSD Secure Shell server.

░░

░░ An ExecStartPre= process belonging to unit ssh.service has exited.

░░

░░ The process' exit code is 'exited' and its exit status is 255.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Failed with result 'exit-code'.

░░ Subject: Unit failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ The unit ssh.service has entered the 'failed' state with result 'exit-code'.

Feb 16 01:09:09 mcwai systemd[1]: Failed to start ssh.service - OpenBSD Secure Shell server.

░░ Subject: A start job for unit ssh.service has failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ A start job for unit ssh.service has finished with a failure.

░░

░░ The job identifier is 118396 and the job result is failed.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Scheduled restart job, restart counter is at 4.

░░ Subject: Automatic restarting of a unit has been scheduled

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ Automatic restarting of the unit ssh.service has been scheduled, as the result for

░░ the configured Restart= setting for the unit.

Feb 16 01:09:09 mcwai systemd[1]: Starting ssh.service - OpenBSD Secure Shell server...

░░ Subject: A start job for unit ssh.service has begun execution

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ A start job for unit ssh.service has begun execution.

░░

░░ The job identifier is 118501.

Feb 16 01:09:09 mcwai sshd[1327306]: /etc/ssh/sshd_config: line 14: Bad configuration option: ServerAliveInterval

Feb 16 01:09:09 mcwai sshd[1327306]: /etc/ssh/sshd_config: line 15: Bad configuration option: ServerAliveCountMax

Feb 16 01:09:09 mcwai sshd[1327306]: /etc/ssh/sshd_config: terminating, 2 bad configuration options

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Control process exited, code=exited, status=255/EXCEPTION

░░ Subject: Unit process exited

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ An ExecStartPre= process belonging to unit ssh.service has exited.

░░

░░ The process' exit code is 'exited' and its exit status is 255.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Failed with result 'exit-code'.

░░ Subject: Unit failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ The unit ssh.service has entered the 'failed' state with result 'exit-code'.

Feb 16 01:09:09 mcwai systemd[1]: Failed to start ssh.service - OpenBSD Secure Shell server.

░░ Subject: A start job for unit ssh.service has failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ A start job for unit ssh.service has finished with a failure.

░░

░░ The job identifier is 118501 and the job result is failed.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Scheduled restart job, restart counter is at 5.

░░ Subject: Automatic restarting of a unit has been scheduled

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ Automatic restarting of the unit ssh.service has been scheduled, as the result for

░░ the configured Restart= setting for the unit.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Start request repeated too quickly.

Feb 16 01:09:09 mcwai systemd[1]: ssh.service: Failed with result 'exit-code'.

░░ Subject: Unit failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ The unit ssh.service has entered the 'failed' state with result 'exit-code'.

Feb 16 01:09:09 mcwai systemd[1]: Failed to start ssh.service - OpenBSD Secure Shell server.

░░ Subject: A start job for unit ssh.service has failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ A start job for unit ssh.service has finished with a failure.

░░

░░ The job identifier is 118606 and the job result is failed.

ChatGPT said:

Yep — the interval value (33) is fine. The problem is simpler:

What happened

You put client-side keepalive options into the server config.

ServerAliveIntervalandServerAliveCountMaxbelong in the SSH client config (ssh_config/~/.ssh/config)- The SSH server (

sshd) uses ClientAliveInterval and ClientAliveCountMax

That’s why sshd -t is failing and ssh.service won’t start.

Fix it right now (safe + exact)

1) Edit the server config and replace those two lines

sudo nano /etc/ssh/sshd_configGo to line 14 and 15 and change:

ServerAliveInterval 33

ServerAliveCountMax 3to:

ClientAliveInterval 33

ClientAliveCountMax 3Save and exit.

2) Test config (must be clean)

sudo sshd -tIf this prints nothing, you’re good.

3) Restart SSH

sudo systemctl restart sshAbout TCPKeepAlive yes

Not the same thing.

TCPKeepAlive= kernel-level TCP keepalive probes (slow by default; mainly detects dead peers eventually)ClientAliveInterval/CountMax= sshd application-level keepalives (more reliable for NAT/timeouts, and you control timing)

So keep TCPKeepAlive yes if you want, but the two you meant to add on the server are:

ClientAliveInterval 33

ClientAliveCountMax 3If you also want the client-side version (optional)

On your laptop (adam@laptop), you can add this to ~/.ssh/config:

Host my-conversations-with-ai

ServerAliveInterval 33

ServerAliveCountMax 3That keeps the client sending probes too.

If you paste your first ~25 lines of /etc/ssh/sshd_config (with line numbers), I’ll sanity-check there aren’t any other landmines—but the error you posted is 100% explained by those two directives.

You said:

oh ok, I changed it to Client, but I see this

adam@mcwai:~$ sudo sshd -t

Missing privilege separation directory: /run/sshd

ChatGPT said:

Good catch — that message means sshd can’t find /run/sshd, which it uses at runtime for privilege separation. It’s usually created automatically by systemd, but since sshd is failing early (and /run is tmpfs), it may not exist right now.

Do this:

1) Create it (owned by root, safe perms)

sudo mkdir -p /run/sshd

sudo chmod 0755 /run/sshd

sudo chown root:root /run/sshd2) Re-test config

sudo sshd -t3) Start/restart ssh

sudo systemctl restart ssh

sudo systemctl status ssh --no-pager -lIf it comes back after reboot (make it persistent via systemd tmpfiles)

On Ubuntu/Debian, /run is recreated on boot, so the right long-term fix is usually tmpfiles:

printf "d /run/sshd 0755 root root -\n" | sudo tee /etc/tmpfiles.d/sshd.conf

sudo systemd-tmpfiles --create /etc/tmpfiles.d/sshd.confThen:

sudo systemctl restart sshIf sshd -t still complains after that, paste the output of:

ls -ld /run /run/sshd

sudo systemctl cat ssh.service | sed -n '1,120p'

sudo journalctl -xeu ssh.service --no-pager | tail -n 80You said:

if my laptop has this TCPKeepAlive yes then why not uncomment the same line on the server? you said to leave it as is.

Host yourserver

HostName 178.156.185.220

ServerAliveInterval 10

ServerAliveCountMax 3

TCPKeepAlive yes

ChatGPT said:

Two key points:

- Leaving

TCPKeepAlivecommented insshd_configdoes not mean it’s “off.”

In OpenSSH,TCPKeepAlivedefaults to yes on both client and server. So:

#TCPKeepAlive yes(commented) ➜ still behaves like yesTCPKeepAlive yes(uncommented) ➜ same behavior, just explicitTCPKeepAlive no➜ changes behavior (turns it off)

So on the server, uncommenting TCPKeepAlive yes usually changes nothing.

TCPKeepAliveis not the same thing asServerAliveInterval/CountMax.

They operate at different layers:

TCPKeepAlive= kernel-level TCP keepalives (SO_KEEPALIVE).

On Linux these are typically very slow by default (often ~2 hours before the first probe), and they’re mainly for detecting dead peers eventually. They do not reliably keep NAT/firewall state alive in the way you want for SSH sessions/tunnels.ServerAliveInterval+ServerAliveCountMax= SSH-protocol keepalives (application-layer).

These are frequent, encrypted “are you there?” checks that work much better for keeping NAT mappings alive and detecting broken links quickly.

That’s why I told you to use the SSH-layer keepalives (ServerAlive* on the client, and if you want the server side equivalent, use ClientAliveInterval / ClientAliveCountMax in sshd_config).

Practical rule

- Keep your client block like you have it (good for NAT/tunnels):

ServerAliveInterval 10

ServerAliveCountMax 3- On the server, don’t bother uncommenting

TCPKeepAlive yesbecause it’s already effectively on by default. - If you want server-side “active” keepalives, set:

ClientAliveInterval 60

ClientAliveCountMax 3

(Adjust if you want it more aggressive.)

So: your laptop having TCPKeepAlive yes doesn’t mean you need to explicitly set it on the server — and it’s not a replacement for the ServerAlive* behavior you were aiming for.

You said:

make an cool looking infographic for my blog about this keepalive we've been discussing